Ultimate Volleyball: A multi-agent reinforcement learning environment built using Unity ML-Agents

August 11, 2021 • Joy Zhang • Resources • 5 minutes

Inspired by Slime Volleyball Gym, I built a 3D Volleyball environment using Unity's ML-Agents toolkit. The full project is open-source and available at: 🏐 Ultimate Volleyball.

In this article, I share an overview of the implementation details, challenges, and learnings, from designing the environment to training an agent in it. For background on ML-Agents, please check out my Introduction to ML-Agents article.

Versions used: ML-Agents Release 18 (June 9, 2021)

- Python package: 0.27.0

- Unity package: 2.1.0

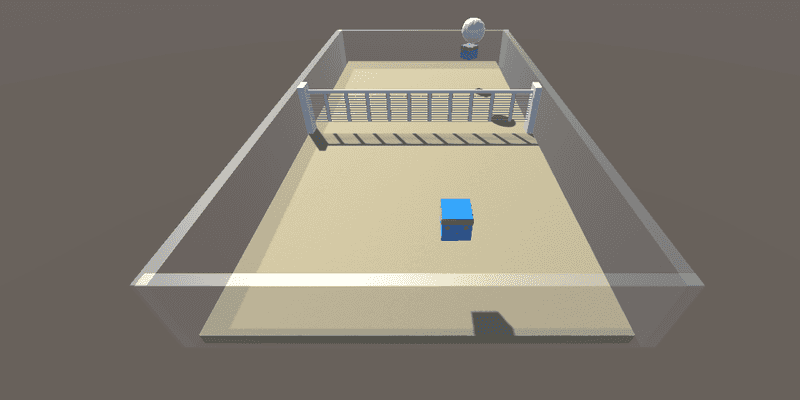

🥅 Setting up the court

Unity’s wide library of free assets and sample projects makes it easy to create a simple environment.

Here’s what I used:

- Agent Cube prefabs from the ML-Agents sample projects

- Volleyball prefab & sand material from free Beach Essentials Asset Pack

- Net material from Free Grids & Nets Materials Pack

The rest of the court (net posts, walls, goals, and floor) is just resized and rotated cube objects pieced together.

The floor is actually made up of 2 layers:

- Thin purple-side and blue-side goals on top, each with a ‘trigger’ collider

- A walkable floor below

The goals detect when the ball hits the floor, while the walkable floor provides collision physics for the ball.

Some other implementation details to note:

- The agents look like cubes, but have sphere colliders to help them control the ball trajectory.

- I also added an invisible boundary around the court. I found that during training, agents may shy away from learning to hit the ball at all if you penalize them for hitting the ball out of bounds.

📃 Scripts

In Unity, scripts can be assigned to various game objects to control their behavior. Below is a brief overview of the 4 scripts used to define the environment.

VolleyballAgent.cs

Attached to the Agents

This controls both the collection of observations and actions for the agents.

    public override void CollectObservations(VectorSensor sensor)
    {
        // Agent rotation (1 float)
        sensor.AddObservation(this.transform.rotation.y);

        // Vector from agent to ball (direction to ball) (3 floats)
        Vector3 toBall = new Vector3((ballRb.transform.position.x - this.transform.position.x)*agentRot,
            (ballRb.transform.position.y - this.transform.position.y),
            (ballRb.transform.position.z - this.transform.position.z)*agentRot);

        sensor.AddObservation(toBall.normalized);

        // Distance from the ball (1 float)
        sensor.AddObservation(toBall.magnitude);

        // Agent velocity (3 floats)
        sensor.AddObservation(agentRb.velocity);

        // Ball velocity (3 floats)
        sensor.AddObservation(ballRb.velocity.y);
        sensor.AddObservation(ballRb.velocity.z*agentRot);
        sensor.AddObservation(ballRb.velocity.x*agentRot);
    }

VolleyballController.cs

Attached to the ball

This script checks whether the ball has hit the floor. If so, it triggers the allocation of rewards in VolleyballEnv.cs.

    /// <summary>
    /// Checks whether the ball lands in the blue or purple goal
    /// </summary>
    void OnTriggerEnter(Collider other)
    {
        if (other.gameObject.CompareTag("purpleGoal"))
        {
            envController.ResolveGoalEvent(GoalEvent.HitPurpleGoal);
        }
        else if (other.gameObject.CompareTag("blueGoal"))
        {
            envController.ResolveGoalEvent(GoalEvent.HitBlueGoal);
        }
    }

VolleyballEnv.cs

Attached to the parent volleyball area (which contains the agents, ball, etc.)

This script contains all the logic for managing the starting/stopping of the episode, how objects are spawned, and how rewards should be allocated.

    /// <summary>
    /// Resolves which agent should score
    /// </summary>
    public void ResolveGoalEvent(GoalEvent goalEvent)
    {
        if (goalEvent == GoalEvent.HitPurpleGoal)
        {
            purpleAgent.AddReward(1f);
            blueAgent.AddReward(-1f);
            StartCoroutine(GoalScoredSwapGroundMaterial(volleyballSettings.purpleGoalMaterial, purpleGoalRenderer, .5f));
        }
        else if (goalEvent == GoalEvent.HitBlueGoal)
        {
            blueAgent.AddReward(1f);
            purpleAgent.AddReward(-1f);
            StartCoroutine(GoalScoredSwapGroundMaterial(volleyballSettings.blueGoalMaterial, blueGoalRenderer, .5f));
        }

        blueAgent.EndEpisode();
        purpleAgent.EndEpisode();
        ResetScene();
    }

VolleyballSettings.cs

Attached to a separate ‘VolleyballSettings’ object

This holds constants for basic environment settings, e.g. agent run speed and jump height.

    public class VolleyballSettings : MonoBehaviour
    {
        public float agentRunSpeed = 1.5f;
        public float agentJumpHeight = 2.75f;
        public float agentJumpVelocity = 777;
        public float agentJumpVelocityMaxChange = 10;
    }

🤖 Agents

The environment is designed to be symmetric so that both agents can share the same model and be trained with Self-Play.

I decided to start simple with vector observations. These are all defined relative to the agent, so it doesn’t matter whether the agent is playing as the blue or purple cube.

Observations

Total observation space size: 11

- Rotation (Y-axis) - 1

- 3D Vector from agent to the ball - 3

- Distance to the ball - 1

- Agent velocity (X, Y & Z-axis) - 3

- Ball velocity (X, Y & Z-axis) - 3

Note: it’s pretty unrealistic for an agent to know such direct observations about its environment. Further improvements could involve adding Raycasts, opponent positions (to encourage competitive plays), and normalizing the values (to help training converge faster).
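To illustrate why agent-relative observations make the two sides interchangeable, here is a minimal Python sketch (not the project's C# code). It assumes that `agent_rot` is +1 for one side and -1 for the other, and that the court is mirrored by flipping the X and Z axes; the positions are made-up values for illustration.

```python
import math


def relative_ball_observation(agent_pos, ball_pos, agent_rot):
    """Return (unit direction to ball, distance), with X and Z flipped by agent_rot.

    agent_rot is assumed to be +1 for one side of the net and -1 for the other.
    """
    dx = (ball_pos[0] - agent_pos[0]) * agent_rot
    dy = ball_pos[1] - agent_pos[1]
    dz = (ball_pos[2] - agent_pos[2]) * agent_rot
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    if distance == 0:
        # Avoid dividing by zero when the ball sits exactly on the agent.
        return (0.0, 0.0, 0.0), 0.0
    return (dx / distance, dy / distance, dz / distance), distance


# Hypothetical mirrored situations: same play, seen from opposite sides of the net.
purple = relative_ball_observation((1.0, 0.5, -4.0), (2.0, 3.0, -1.0), agent_rot=1)
blue = relative_ball_observation((-1.0, 0.5, 4.0), (-2.0, 3.0, 1.0), agent_rot=-1)

print(purple == blue)  # True: both agents observe the same values
```

Because both agents see identical numbers for mirrored situations, a single policy can, in principle, control either cube.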

Available actions

4 discrete branches:

- Move forward, backward, stay still (size: 3)

- Move left, right, stay still (size: 3)

- Rotate left, right, stay still (size: 3)

- Jump, no jump (size: 2)
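As a rough illustration of what four discrete branches mean in practice, the Python sketch below enumerates every combination of one choice per branch. The index-to-label mapping is hypothetical and only for illustration; the agent's C# script may order the options differently.

```python
from itertools import product
from math import prod

# Branch sizes as listed above: forward/back, left/right, rotate, jump.
BRANCH_SIZES = (3, 3, 3, 2)

# Hypothetical index-to-meaning mapping, for illustration only.
BRANCH_LABELS = (
    ("stay", "forward", "backward"),
    ("stay", "left", "right"),
    ("stay", "rotate left", "rotate right"),
    ("no jump", "jump"),
)


def describe(action):
    """Translate one index per branch into human-readable labels."""
    if len(action) != len(BRANCH_SIZES):
        raise ValueError("expected one index per branch")
    return tuple(labels[i] for labels, i in zip(BRANCH_LABELS, action))


# Every combination of one choice per branch.
joint_actions = list(product(*(range(n) for n in BRANCH_SIZES)))
num_joint_actions = prod(BRANCH_SIZES)

print(num_joint_actions)       # 54
print(describe((1, 0, 2, 1)))  # ('forward', 'stay', 'rotate right', 'jump')
```

Each step, the policy picks one index per branch, so the agent can move, rotate, and jump at the same time.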

🍭 Rewards

For the purposes of training a simple agent to volley, I chose a simple reward of +1 for hitting the ball over the net. As you’ll see in the next section, this worked relatively well and no further reward shaping was needed.

If you plan on training a competitive agent or using Self-Play, the ML-Agents documentation suggests keeping the rewards simple (+1 for the winner, -1 for the loser) and allowing for more training iterations to compensate.

🏋️‍♀️ Training

For training, I chose PPO simply because it was the more straightforward option and likely to be stable.

Unity ML-Agents provides an implementation of the PPO algorithm out of the box (other options include SAC and POCA, plus GAIL for imitation learning). All you need to provide are the hyperparameters in a YAML config file:

behaviors:

Volleyball:

trainer_type: ppo

hyperparameters:

batch_size: 2048

buffer_size: 20480

learning_rate: 0.0002

beta: 0.003

epsilon: 0.15

lambd: 0.93

num_epoch: 4

learning_rate_schedule: constant

network_settings:

normalize: true

hidden_units: 256

num_layers: 2

vis_encode_type: simple

reward_signals:

extrinsic:

gamma: 0.96

strength: 1.0

keep_checkpoints: 5

max_steps: 20000000

time_horizon: 1000

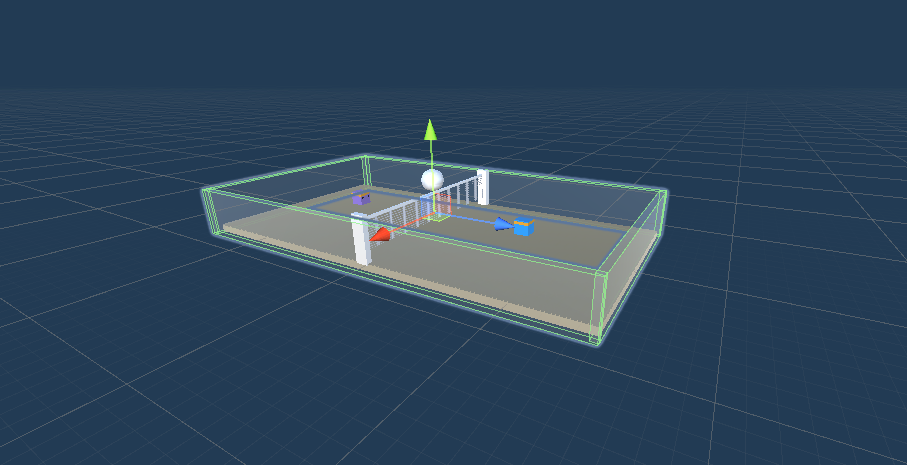

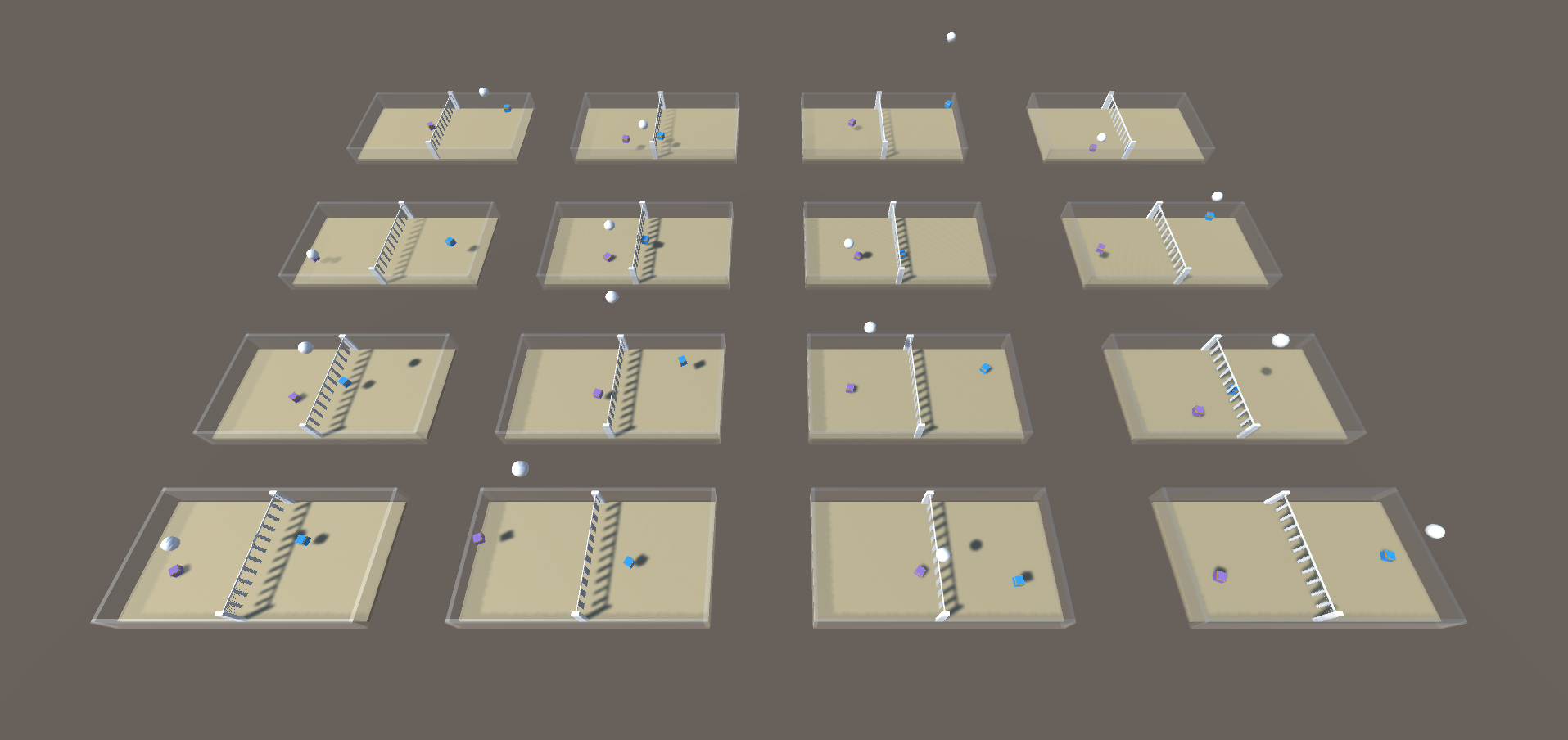

summary_freq: 20000To speed up training, I created 16 duplicates of the environment which all contribute to training the same model in parallel:

Unity ML-Agents does a great job of abstracting out all the boilerplate code needed to do reinforcement learning. The terminal command for training will look similar to:

    mlagents-learn .\config\Volleyball.yaml --run-id=VB_01 --time-scale=1

Note: ML-Agents defaults to a time scale of 20 to speed up training. Explicitly setting the flag --time-scale=1 is important because the physics in this environment are time-dependent.

With this setup, it took ~7 hours to train 20 million steps. By this stage, the agents are able to almost perfectly volley back and forth!
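To put the config and training time in perspective, here is some back-of-the-envelope arithmetic in Python. It is only a sketch: it assumes the PPO trainer waits for `buffer_size` experiences, then makes `num_epoch` passes over them in minibatches of `batch_size` (my understanding of ML-Agents' PPO), and it treats the ~7 hours as exact. The `1 / (1 - gamma)` horizon is a common rule of thumb, not something ML-Agents reports.

```python
# Values copied from the config and training notes above.
batch_size = 2048
buffer_size = 20480
num_epoch = 4
max_steps = 20_000_000
summary_freq = 20_000
gamma = 0.96
training_hours = 7  # approximate figure from the article

# Minibatches in one pass over a full buffer.
minibatches_per_epoch = buffer_size // batch_size
# Gradient steps each time the buffer fills (assumed PPO update scheme).
gradient_steps_per_update = num_epoch * minibatches_per_epoch
# Rough number of times the buffer fills during the whole run.
policy_updates = max_steps // buffer_size
# Number of summary points written during the run.
summaries = max_steps // summary_freq
# Average throughput across all parallel environments.
steps_per_second = max_steps / (training_hours * 3600)
# Rule-of-thumb horizon over which discounted rewards still matter.
reward_horizon = 1 / (1 - gamma)

print(minibatches_per_epoch)       # 10
print(gradient_steps_per_update)   # 40
print(policy_updates)              # 976
print(summaries)                   # 1000
print(round(steps_per_second))     # 794
print(round(reward_horizon))       # 25
```

The short reward horizon (about 25 steps) suggests the agent mostly cares about the immediate outcome of each hit, which fits a simple volleying reward.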

👟 Next steps

Thanks for reading!

I hope this article gave you a good idea of the process behind designing and training reinforcement learning agents in Unity ML-Agents. For anyone interested in a hands-on project with reinforcement learning, or looking for a way to create complex and physics-rich RL environments, I highly recommend giving Unity ML-Agents a go.

Please do check out the environment and if you’re interested, submit your own agent to compete against others!

📚 Resources

For others looking to build their own environments, I found these resources helpful:

- Hummingbirds Course by Unity (unfortunately outdated, but still useful).

- ML-Agents Sample Environments (particularly the Soccer & Wall Jump environments)